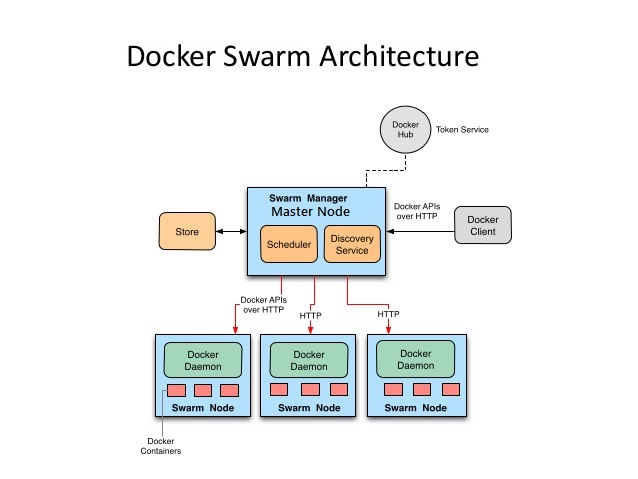

Docker Swarm is native clustering for Docker. The best part is that it exposes the standard Docker API, meaning that any tool you already use to communicate with Docker (Docker CLI, Docker Compose, Dokku, Krane, and so on) works equally well with Docker Swarm. That is both an advantage and a disadvantage at the same time. Being able to use familiar tools of your own choosing is great, but for the same reason Swarm is bound by the limitations of the Docker API: if the API doesn't support something, Swarm cannot offer it either, and some clever workarounds are needed.

Installing Docker Swarm and configuring a cluster is easy, straightforward, and flexible. All we have to do is choose a service discovery backend and run the swarm container on all nodes. This guide uses the token discovery service hosted on Docker Hub, so no separate discovery tool has to be installed. The first step to creating a swarm on your network is to pull the Docker Swarm image. Then, using Docker, you configure the swarm manager and all the nodes to run Docker Swarm.

This method requires that you:

- open a TCP port on each node for communication with the swarm manager

- install Docker on each node

- create and manage TLS certificates to secure your swarm

How to Install Docker Swarm and Configure a Cluster

Install Docker on all the nodes and start the Docker daemon with its remote API listening on TCP port 2375. It is best to run the command below inside a screen session so the daemon keeps running after you log out. I used three node servers in my environment:

- Master/node1: ip-10-0-3-227 (10.0.3.227)

- node2: ip-10-0-3-226 (10.0.3.226)

- node3: ip-10-0-3-228 (10.0.3.228)

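Log in to all your servers and start Docker with the API enabled. The -d flag starts the daemon on the older Docker releases used here; newer releases start the daemon with dockerd instead.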

# docker -H tcp://0.0.0.0:2375 -d &
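Before moving on, it can help to confirm that every daemon really answers on port 2375. The sketch below assumes curl is installed on the machine you run it from and relies on the Docker Remote API's /_ping endpoint, which returns OK from a healthy daemon. The NODES list is just the three example hosts above, and the function names are hypothetical.

```bash
# Hypothetical helpers: verify that every node's Docker daemon answers on the
# unencrypted remote API port used in this guide.

# Example node IPs from this guide; adjust for your environment.
NODES="10.0.3.227 10.0.3.226 10.0.3.228"
DOCKER_PORT=2375

# ping_daemon <ip>: returns 0 if the daemon at <ip> answers /_ping with "OK".
ping_daemon() {
    local ip="$1"
    [ "$(curl -fsS --max-time 5 "http://${ip}:${DOCKER_PORT}/_ping" 2>/dev/null)" = "OK" ]
}

# check_all_daemons: prints one status line per node and
# returns non-zero if any node is unreachable.
check_all_daemons() {
    local ip status=0
    for ip in $NODES; do
        if ping_daemon "$ip"; then
            echo "${ip}: OK"
        else
            echo "${ip}: UNREACHABLE"
            status=1
        fi
    done
    return "$status"
}
```

After sourcing the file, run check_all_daemons from any machine that can reach the nodes.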

Install Docker Swarm on the master node and create a swarm token using the following command. The swarm image is pulled automatically the first time it runs.

[root@ip-10-0-3-227 ~]# docker -H tcp://10.0.3.227:2375 run --rm swarm create

f63707621771250dc3925b8f4f6027ae

Note down the swarm token generated by the above command. You will need it throughout the cluster setup.
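Rather than copying the token by hand, you can capture it in a shell variable, because swarm create prints the token on standard output. The validity check below is an assumption based on the token shown in this guide (32 lowercase hex characters), not a documented format, and is_swarm_token is a hypothetical name.

```bash
# Hypothetical helper: returns 0 if $1 looks like the token printed by
# `swarm create` (assumed: 32 lowercase hex characters, as in this guide).
is_swarm_token() {
    case "$1" in
        # Empty, or contains a character outside 0-9a-f: reject.
        ''|*[!0123456789abcdef]*) return 1 ;;
    esac
    # Otherwise the length must be exactly 32.
    [ "${#1}" -eq 32 ]
}

# Example capture (requires the daemon on the master to be reachable):
#   SWARM_TOKEN="$(docker -H tcp://10.0.3.227:2375 run --rm swarm create)"
#   is_swarm_token "$SWARM_TOKEN" || echo "unexpected token: $SWARM_TOKEN" >&2
```

Keeping the token in SWARM_TOKEN lets you reuse it in the join, manage, and list commands without retyping it.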

Now log in to each of your node servers, including the master (node1), and run the following command to join the swarm.

Node2 (ip-10-0-3-226)

Syntax

docker -H tcp://<node_ip>:2375 run -d swarm join --addr=<node_ip>:2375 token://<cluster_token>

Example

[root@ip-10-0-3-226 ~]# docker -H tcp://10.0.3.226:2375 run -d swarm join --addr=10.0.3.226:2375 token://f63707621771250dc3925b8f4f6027ae

Unable to find image 'swarm:latest' locally

latest: Pulling from docker.io/swarm

ff560331264c: Pull complete

d820e8bd65b2: Pull complete

8d00f520df22: Pull complete

e006ebc1de3a: Pull complete

7390274120a7: Pull complete

0036abe904ed: Pull complete

bd420ed092aa: Pull complete

8db3c7d27267: Pull complete

docker.io/swarm:latest: The image you are pulling has been verified. Important: image verification is a tech preview feature and should not be relied on to provide security.

Digest: sha256:e72c009813e43c68e01019df9d481e3009f41a26a4cad897a3b832100398459b

Status: Downloaded newer image for docker.io/swarm:latest

d04d00d5afacc37f290b92ed01658eca147c5510533d9cb0a0dfc1aa20edfcef

Node3 (ip-10-0-3-228)

[root@ip-10-0-3-228 ~]# docker -H tcp://10.0.3.228:2375 run -d swarm join --addr=10.0.3.228:2375 token://f63707621771250dc3925b8f4f6027ae
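Since the join command differs only by IP address, you may prefer to loop over all the nodes, including the master, whose own agent appears in the swarm list output later. This is a sketch: the token must be the one printed by swarm create, and join_node and join_all are hypothetical names.

```bash
# Hypothetical helpers: start a swarm agent on each node using token discovery.
NODES="10.0.3.227 10.0.3.226 10.0.3.228"

# join_node <ip> <token>: run the swarm join container against the daemon at <ip>,
# advertising that same daemon address to the discovery service.
join_node() {
    local ip="$1" token="$2"
    docker -H "tcp://${ip}:2375" run -d swarm join --addr="${ip}:2375" "token://${token}"
}

# join_all <token>: join every node in NODES; stops at the first failure.
join_all() {
    local ip
    for ip in $NODES; do
        join_node "$ip" "$1" || { echo "join failed on ${ip}" >&2; return 1; }
    done
}
```

For example, join_all f63707621771250dc3925b8f4f6027ae starts one agent container per node and prints each container ID.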

Verify that the swarm agent is running on a node server using the following command.

[root@ip-10-0-3-226 ~]# docker -H tcp://10.0.3.226:2375 ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d04d00d5afac swarm "/swarm join --addr= 2 minutes ago Up 2 minutes 2375/tcp sleepy_engelbart

Replace the IP address and repeat the check on every node server.
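To repeat that check across every node at once, the sketch below searches each daemon's docker ps --no-trunc output for a /swarm join command. The search pattern is an assumption based on the ps output shown above, and agent_running and check_agents are hypothetical names.

```bash
# Hypothetical helpers: confirm a swarm agent container is running on each node.
NODES="10.0.3.227 10.0.3.226 10.0.3.228"

# agent_running <ip>: 0 if a running container's command contains "/swarm join".
# (docker ps without -a lists only running containers.)
agent_running() {
    docker -H "tcp://$1:2375" ps --no-trunc | grep -q '/swarm join'
}

# check_agents: one status line per node; non-zero if any agent is missing.
check_agents() {
    local ip status=0
    for ip in $NODES; do
        if agent_running "$ip"; then
            echo "${ip}: agent running"
        else
            echo "${ip}: no swarm agent found"
            status=1
        fi
    done
    return "$status"
}
```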

To bring all the joined nodes together as one cluster, set up a swarm manager on the master node using the following command. The manager listens on port 2375 inside its container, so publish that container port on the host (port 5000 here).

[root@ip-10-0-3-227 ~]# docker -H tcp://10.0.3.227:2375 run -d -p 5000:2375 swarm manage token://f63707621771250dc3925b8f4f6027ae

To list all the nodes in the cluster, run the following command from the Docker client node.

[root@ip-10-0-3-227 ~]# docker -H tcp://10.0.3.227:2375 run --rm swarm list token://f63707621771250dc3925b8f4f6027ae

10.0.3.227:2375

10.0.3.226:2375

10.0.3.228:2375
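If you script the setup, you can compare the discovery list with the nodes you expect. The hypothetical missing_nodes function below prints every expected address that swarm list did not return. It assumes the one-address-per-line output shown above.

```bash
# Hypothetical helper: report expected nodes missing from the discovery list.
EXPECTED="10.0.3.227:2375 10.0.3.226:2375 10.0.3.228:2375"

# missing_nodes <token>: print each expected ip:port absent from `swarm list`.
missing_nodes() {
    local listed addr
    listed="$(docker -H tcp://10.0.3.227:2375 run --rm swarm list "token://$1")"
    for addr in $EXPECTED; do
        # -x: match the whole line, -F: treat the address as a fixed string.
        printf '%s\n' "$listed" | grep -qxF "$addr" || echo "$addr"
    done
}
```

An empty result means every expected node is registered, for example: [ -z "$(missing_nodes "$SWARM_TOKEN")" ] && echo "all nodes registered".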

Run the following command from the client to show the details of a node server.

Syntax

docker -H tcp://<node_ip>:2375 info

[root@ip-10-0-3-227 ~]# docker -H tcp://10.0.3.226:2375 info
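Pointing docker info at the swarm manager rather than at a single daemon reports on the whole cluster. The sketch below assumes the manager is reachable on host port 5000 of the master (MANAGER is a hypothetical variable) and that Swarm's info output contains a line of the form Nodes: N. Treat that line format as an assumption to check against your own output. cluster_node_count is also a hypothetical name.

```bash
# Hypothetical: address of the swarm manager published on the master.
MANAGER="tcp://10.0.3.227:5000"

# cluster_node_count: print N from the "Nodes: N" line of the manager's info.
# Comparing the first field tolerates any leading whitespace on that line.
cluster_node_count() {
    docker -H "$MANAGER" info | awk '$1 == "Nodes:" { print $2; exit }'
}
```

With the three servers in this guide, [ "$(cluster_node_count)" = 3 ] should succeed once every agent has registered.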

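Next, test your cluster setup by deploying a container onto the cluster. For example, run a test busybox container from the Docker client using the following command. Note that port 2375 on the master talks to that host's Docker daemon directly, so this container runs on the master. To let Swarm schedule it on any node, point -H at the host port published for the swarm manager instead.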

[root@ip-10-0-3-227 ~]# docker -H tcp://10.0.3.227:2375 run -dt --name swarm-test busybox /bin/sh

Now list the running Docker containers using the following command.

[root@ip-10-0-3-227 ~]# docker -H tcp://10.0.3.227:2375 ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6aaec7894903 busybox "/bin/sh" 2 hours ago Up 2 hours swarm-test

7d1e74741eb1 swarm "/swarm manage token 2 hours ago Up 2 hours 2375/tcp, 0.0.0.0:5000->5000/tcp goofy_lalande

f0b654832976 swarm "/swarm join --addr= 2 hours ago Up 2 hours 2375/tcp sharp_carson
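To check the test container from a script instead of reading the table, the hypothetical container_up function below looks for the name in the last (NAMES) column of docker ps. When you query the swarm manager, container names may be prefixed with the node name (for example ip-10-0-3-226/swarm-test), so the sketch strips everything up to the last slash. Treat that prefix format as an assumption.

```bash
# Hypothetical helper.
# container_up <docker_host> <name>: 0 if a running container named <name> is listed.
container_up() {
    docker -H "$1" ps | awk -v want="$2" '
        NR > 1 {                      # skip the header row
            name = $NF                # NAMES is the last column
            sub(/.*\//, "", name)     # drop a node prefix such as node/, if any
            if (name == want) found = 1
        }
        END { exit !found }'
}
```

For example, container_up tcp://10.0.3.227:2375 swarm-test && echo "swarm-test is running".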

That's it. These are the steps to install Docker Swarm and configure a cluster.